Imagine your phone knowing exactly what you’re about to do—tapping a button, scrolling through an app, or selecting a setting—before you even touch the screen. No more fumbling through menus or repeating actions. That’s the kind of intuitive, seamless interaction a new AI model called UI-R1 is aiming to bring to life.

Developed by researchers at vivo AI Lab and MMLab at CUHK, UI-R1 is designed to improve how well AI agents understand graphical user interfaces (GUIs) and predict the right action to take on them. And it doesn’t rely on massive data dumps: it gets smarter through reinforcement learning, much like how humans learn through trial and error.

From Reasoning to Real-World Action

UI-R1 builds on the idea behind DeepSeek-R1, a language model that learned to reason through reinforcement learning (RL) with rule-based rewards. But here’s the twist: UI-R1 carries that recipe into the world of multimodal AI, which means it learns from both visual elements (like your phone screen) and language (the task instructions or context). It’s like giving the model both eyes and a brain, and teaching it to use both to figure out what to do next.

To train it, the team built a dataset of 136 complex, real-world mobile tasks (think real app interfaces, not simplified mockups), covering five common action types. Instead of relying on thousands of labeled examples, they used a rule-based reward system to guide learning: each output the model produces is scored against simple, checkable rules, such as whether the predicted action matches the correct one, so the model gradually learns what a “good” decision looks like.
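To make the idea of a rule-based reward more concrete, here is a minimal Python sketch. It is an illustration only, not the paper’s implementation: the function name `rule_based_reward`, the dictionary keys, the `<think>`/`<answer>` tag format, and the equal weighting of the three checks are all assumptions made for this example.

```python
import re

# Assumed response format: reasoning in <think>, final action in <answer>.
FORMAT_PATTERN = re.compile(
    r"^<think>.*?</think>\s*<answer>.*?</answer>$", re.DOTALL
)


def point_in_box(point, box):
    """Return True if (x, y) lies inside box = (x1, y1, x2, y2)."""
    x, y = point
    x1, y1, x2, y2 = box
    return x1 <= x <= x2 and y1 <= y <= y2


def rule_based_reward(response, predicted, target):
    """Score one model output with fixed rules (no learned reward model).

    response:  raw model text
    predicted: dict parsed from the answer, e.g. {"action": "click", "point": (x, y)}
    target:    dict with the correct "action" and, for clicks, a target "box"
    """
    # Rule 1: did the output follow the expected format?
    format_reward = 1.0 if FORMAT_PATTERN.match(response.strip()) else 0.0

    # Rule 2: did the model choose the right kind of action?
    type_reward = 1.0 if predicted.get("action") == target["action"] else 0.0

    # Rule 3: for clicks, did the point land inside the target element?
    coord_reward = 0.0
    if type_reward and target["action"] == "click":
        point = predicted.get("point")
        if point is not None and point_in_box(point, target["box"]):
            coord_reward = 1.0

    # Assumption: the three checks are simply added with equal weight.
    return format_reward + type_reward + coord_reward


# Hypothetical example: the model clicks inside the correct element.
response = "<think>The Settings icon is near the top.</think><answer>click(540, 120)</answer>"
predicted = {"action": "click", "point": (540, 120)}
target = {"action": "click", "box": (500, 100, 580, 140)}
print(rule_based_reward(response, predicted, target))
```

The appeal of a reward like this is that it needs no human grader and no second model: every check is a plain comparison that can run automatically on each training sample.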

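Training uses Group Relative Policy Optimization (GRPO), an existing RL algorithm from earlier DeepSeek work. For each task, the model generates a group of candidate responses, and the ones that score better than the rest of the group are reinforced. The team’s own contribution here is a unified rule-based action reward that makes this kind of optimization work for GUI actions.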
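The “group relative” part of GRPO can be sketched in a few lines of Python. This shows only the advantage calculation (each reward normalized by the mean and standard deviation of its group), not the full training loop. The function name, the `eps` constant, the sample rewards, and the use of the population standard deviation are assumptions for this example; implementations differ on such details.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the other samples in its group.

    rewards: scores for several responses sampled for the SAME task
    eps:     small constant so an all-equal group does not divide by zero
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population standard deviation
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four responses to one screenshot and instruction.
rewards = [3.0, 2.0, 0.0, 3.0]
advantages = group_relative_advantages(rewards)

# Responses above the group mean get a positive advantage (reinforced);
# responses below it get a negative one (discouraged).
for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f} advantage={advantage:+.3f}")
```

Because each response is judged against its own group, no separate value network is needed to estimate a baseline.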

The Results? Surprisingly Smart

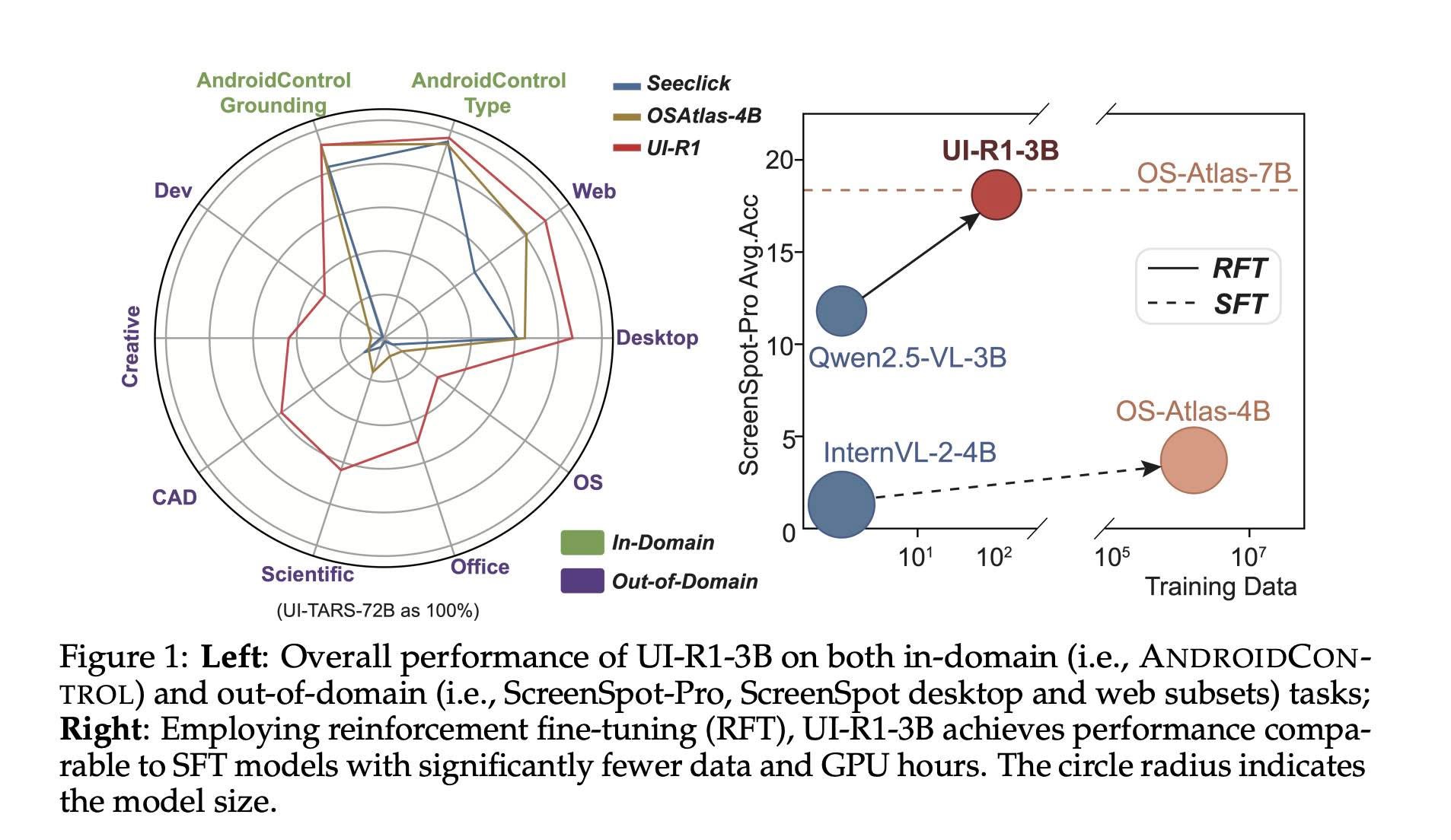

Compared to its base model, Qwen2.5-VL-3B, UI-R1-3B delivered some solid gains:

• 15% higher action type accuracy on the AndroidControl benchmark

• 10.3% higher grounding accuracy, meaning it identifies the right UI elements more often

• 6% improvement on ScreenSpot-Pro, an out-of-domain benchmark with tasks it wasn’t trained on

And here’s the kicker: trained on just 136 tasks, the 3B model is competitive with larger models such as OS-Atlas-7B, which was trained with supervised fine-tuning on 76,000 data points. That’s a big deal. It suggests that smart training strategies can rival brute-force data collection.

Why This Matters

This research hints at a future where AI assistants could become far more helpful and intuitive. Whether you’re using voice commands, accessibility features, or automated testing tools, having an AI that truly “gets” the UI and can work out the right next step opens up all kinds of possibilities.

Less friction. Fewer taps. Smarter machines.

UI-R1 is a glimpse into that future—and it’s learning fast.

Check out the full paper here:

UI-R1: Enhancing Action Prediction of GUI Agents by Reinforcement Learning
