The AI Revolution You Didn’t See Coming

In the world of Artificial Intelligence (AI), a silent revolution began in 2017—and unless you’re deeply into tech, you probably missed the spark that ignited it all. But today, you’re seeing the results everywhere—from ChatGPT chatting with you (yes, hi!) to AI art, translation tools, voice assistants, and so much more.

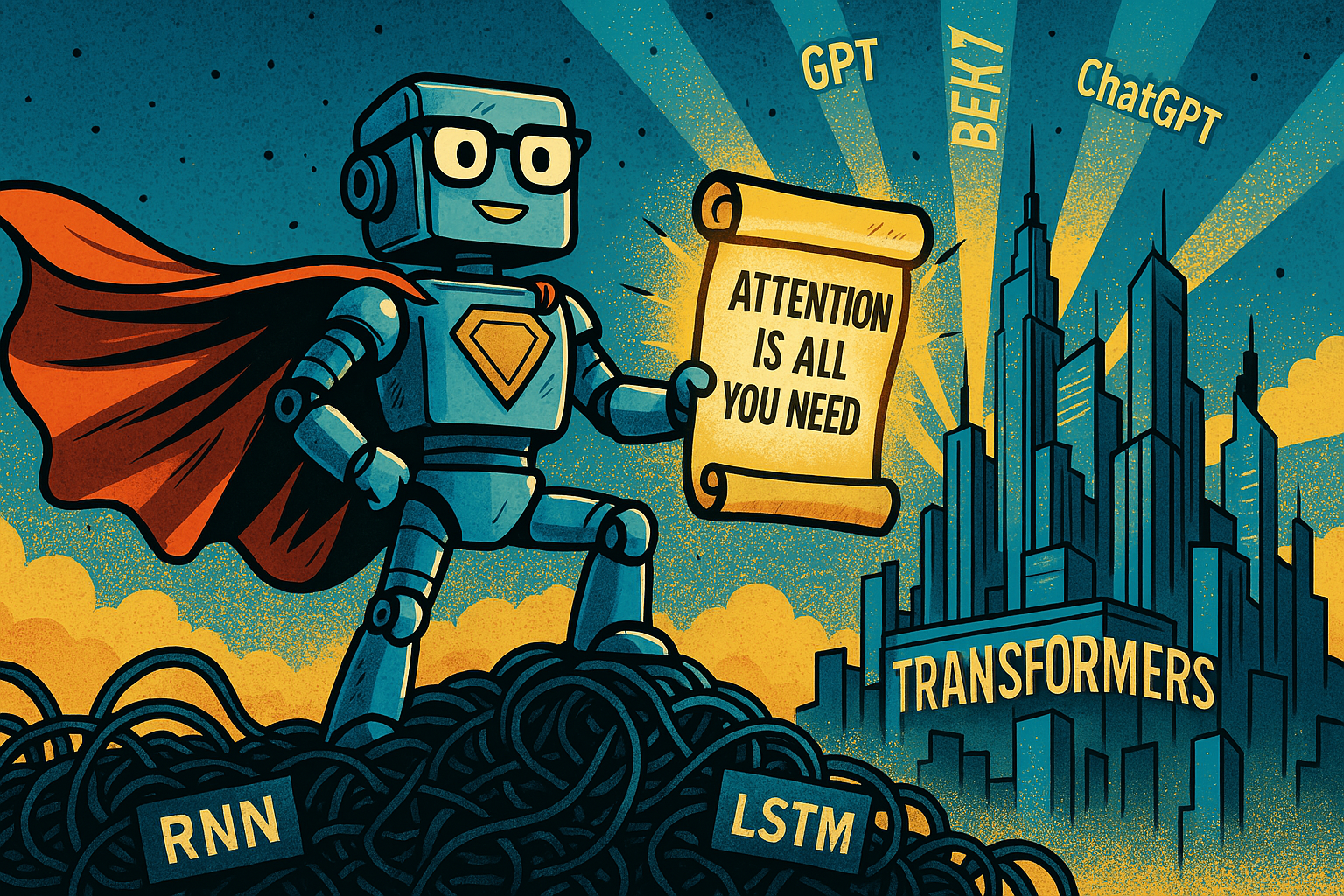

The revolution began with a bold idea laid out in a now-famous 2017 research paper titled “Attention Is All You Need.” Sounds intense? Don’t worry, we’ll break it down.

What Came Before: The Old AI Ways

Before this paper, AI models that worked with language mostly relied on Recurrent Neural Networks (RNNs) and a popular variant of them, Long Short-Term Memory (LSTM) networks. Think of them as reading a book one word at a time while trying to hold everything read so far in memory. That sounds hard, right? Well, it was.

These models:

• Took a long time to train, because they had to process words one after another

• Were super complex

• Struggled to remember words that came much earlier in a sentence or paragraph

Basically, they were powerful—but had major memory issues and were slow learners.
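To make the memory problem concrete, here is a tiny sketch. It is a deliberately simplified toy, not a real RNN: real networks use learned weight matrices and nonlinear functions, and LSTMs add gates designed to soften exactly this effect. The function name `run_recurrent` and the `decay` value are made up for illustration. It shows two things: each step has to wait for the previous one, and a signal from the first word fades as the sequence gets longer.

```python
def run_recurrent(inputs, decay=0.5):
    """Toy recurrence: each step mixes the old state with the new input.

    `decay` is a hypothetical stand-in for the learned weights of a real RNN.
    """
    state = 0.0
    for x in inputs:  # step t cannot start until step t-1 has finished
        state = decay * state + x
    return state

# A signal of 1.0 at the first position, followed by zeros.
short_trace = run_recurrent([1.0] + [0.0] * 4)   # 5 words
long_trace = run_recurrent([1.0] + [0.0] * 29)   # 30 words

print(short_trace)  # what is left of the first word after 5 steps
print(long_trace)   # what is left of it after 30 steps
```

In this toy, the trace of the first word is halved at every step, so after 30 words almost nothing of it remains in the state.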

The Lightbulb Moment: “What if We Just Paid Attention?”

A team of researchers at Google (Ashish Vaswani and his co-authors) asked, in effect, a simple but revolutionary question:

“What if, instead of processing every word in order, the model could just ‘pay attention’ to the important parts, no matter where they are in the sentence?”

Boom. That’s when they introduced the Transformer: a brand-new architecture that didn’t use recurrence at all. Attention did the heavy lifting.

What Is Attention? (No Jargon, Promise)

Imagine you’re reading a paragraph and trying to figure out what “it” refers to. You scan back and realize “it” means “the cat.” That process of scanning and focusing on the relevant parts? That’s attention.

Now imagine an AI doing that—looking at all the words at once and figuring out which ones matter most when trying to understand something. That’s what the Transformer does.

It’s like giving the AI a superpower: the ability to see the whole picture at once instead of one piece at a time.
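For the curious, the “it” example can be sketched in a few lines of plain Python. This is a minimal illustration of scaled dot-product attention, the core operation in the paper, under loud assumptions: the word vectors below are invented by hand so that “it” resembles “cat”, whereas a real Transformer learns its vectors and uses separate learned projections for queries, keys, and values. The names `attend`, `vectors`, and `it_query` are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Score every word against the query at once; no word waits for another.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    width = len(values[0])
    # Blend the value vectors, weighted by how much attention each word gets.
    blended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(width)]
    return weights, blended

# Hand-made toy vectors (assumption: a real model would learn these).
words = ["the", "cat", "sat"]
vectors = [[0.1, 0.0], [1.0, 0.9], [0.0, 0.3]]
it_query = [1.0, 1.0]  # stands in for the word "it"

weights, blended = attend(it_query, vectors, vectors)
focus = words[weights.index(max(weights))]
print(focus)  # the word that "it" attends to most
```

With these toy numbers, the largest weight lands on “cat”, which is the scanning-back-and-focusing step described above, done with arithmetic.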

Why Was This a Game-Changer?

Here’s what changed with the Transformer architecture:

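• It was faster to train because it could process all the words at once, in parallel, instead of one after another

• It handled long sentences better, because any word could look directly at any other word, however far apart they were

• It scaled remarkably well: models kept getting better as they got bigger and were trained on more data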

This architecture became the foundation of nearly every major AI language model we see today: GPT, BERT, T5, you name it.

Without this paper, you wouldn’t have ChatGPT helping with your emails or DALL·E creating wild AI art.

So, What’s the Big Deal Now?

Since 2017, the Transformer architecture has become the standard for language AI. It didn’t just make AI better; it helped make AI mainstream. Applications from language translation to music generation now build on this idea.

And all it took was one breakthrough: paying attention.

Want to Dive Deeper?

You can read the original paper yourself right here (it’s surprisingly readable—even if you’re not a scientist):
